Not Z-Image-Base, but Z-Image-Omni-Base

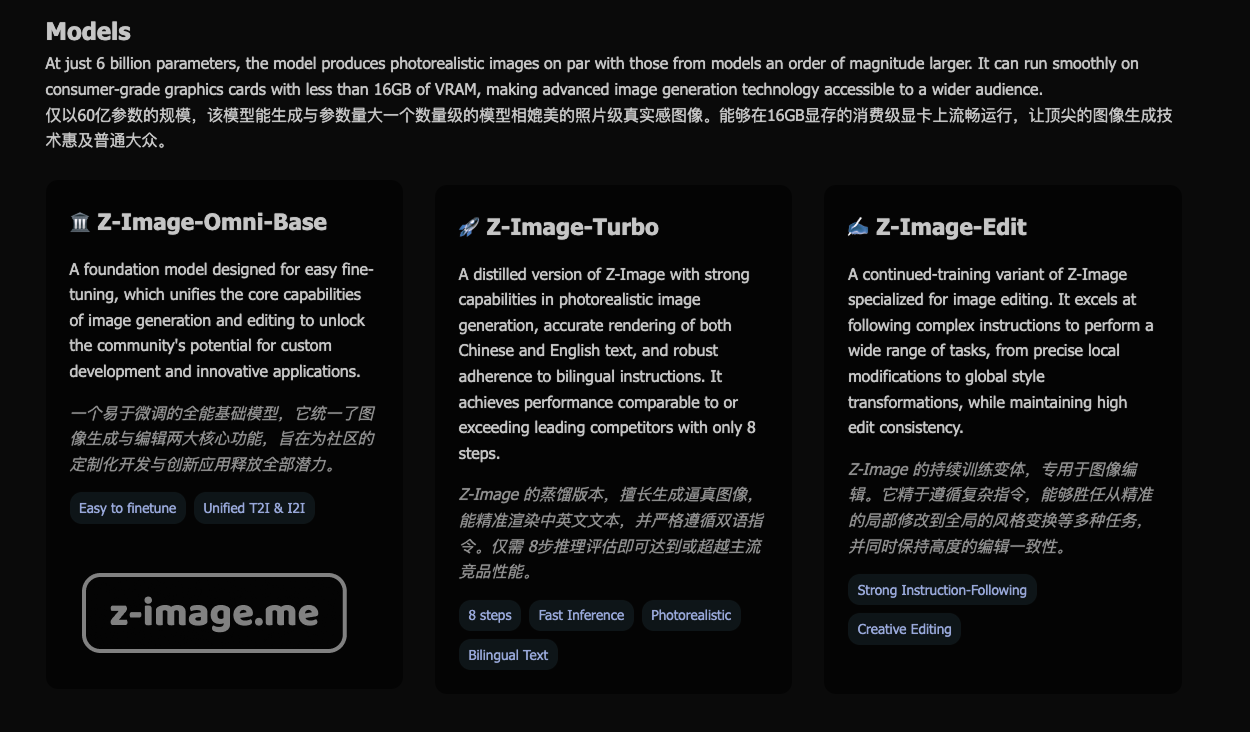

Among today's fast-moving AI image generation models, the Z-Image series from Alibaba's Tongyi-MAI team stands out for its efficient 6B-parameter scale and photorealistic output. The author recently noticed, however, that the official Z-Image blog has quietly renamed the original Z-Image-Base to Z-Image-Omni-Base (ModelScope and Hugging Face had not yet been updated as of publication). The rename is more than a relabel: it signals a strategic shift toward "omni" (all-in-one) pre-training, in which a single model handles both image generation and image editing and so avoids the complexity and performance loss that traditional models incur when switching tasks. By merging generation and editing data into one omni pre-training pipeline, Z-Image-Omni-Base pushes parameter efficiency further and supports cross-task use, such as sharing LoRA adapters between generation and editing. For developers, this means a more flexible open-source tool and less need for multiple specialized variants.

The Rise of Z-Image Series: Evolution from Base to Omni

The core architecture of the Z-Image series is the Scalable Single-Stream Diffusion Transformer (S3-DiT). All variants use a unified input stream that processes text tokens, visual semantic tokens, and image VAE tokens together in one sequence, which helps the model excel at bilingual (Chinese and English) text rendering and instruction following. According to the technical report (arXiv:2511.22699, released December 1, 2025), omni pre-training is a key innovation: it unifies the generation and editing processes and avoids the redundancy of dual-stream architectures. In community discussions, this capability has led users to call the base version Z-Image-Omni-Base, emphasizing that it is an all-purpose model rather than merely a base model for generation.
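
To make the single-stream idea concrete, here is a minimal sketch in plain Python. It only illustrates the input layout (one concatenated sequence carrying all three token types) and is not the S3-DiT implementation; the `Token` class, the `build_single_stream` helper, and the placeholder token values are hypothetical names invented for this example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Token:
    modality: str  # "text", "semantic", or "vae"
    value: str     # stand-in for an embedding vector


def build_single_stream(text: List[str], semantic: List[str], vae: List[str]) -> List[Token]:
    """Concatenate all modalities into one sequence for a single transformer stack."""
    stream: List[Token] = []
    for modality, items in (("text", text), ("semantic", semantic), ("vae", vae)):
        stream.extend(Token(modality, item) for item in items)
    return stream


# Hypothetical inputs: three text tokens, two semantic tokens, four VAE latents.
stream = build_single_stream(
    text=["a", "red", "bicycle"],
    semantic=["sem_0", "sem_1"],
    vae=["lat_0", "lat_1", "lat_2", "lat_3"],
)

# One sequence, one set of transformer weights; the modality tag is the only
# thing that distinguishes the segments.
print(len(stream))
print([t.modality for t in stream])
```

A dual-stream design would instead keep separate sequences (and separate weights) per modality and exchange information between them, which is the redundancy the single-stream layout is meant to avoid.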

Z-Image-Turbo was released on November 26, 2025, with weights open-sourced on Hugging Face and ModelScope and online demo spaces available. By contrast, the weights for Z-Image-Omni-Base and Z-Image-Edit are still marked "coming soon" (no GitHub repository updates after November), and the community suspects the delay is tied to further optimization of the omni functionality. User feedback, for example in Reddit discussions, praises Turbo's sub-second inference (on an H800 GPU, with 8-step inference and CFG=1). Users also note that Omni-Base's unified capabilities should offer more advantages in complex tasks, such as generating diverse images (ingredient-driven dishes, mathematical charts) and editing with natural-language instructions without switching models.
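
The "CFG=1" setting is worth a short worked example. Assuming the standard classifier-free guidance formulation (unconditional prediction plus a scaled difference), a scale of 1 collapses to the conditional prediction alone, so the unconditional forward pass can be skipped. The sketch below uses made-up numbers; `cfg_combine` and `forward_passes` are hypothetical helpers, and the 20-step, scale-4 configuration is an illustrative assumption rather than a published Z-Image setting.

```python
from typing import List


def cfg_combine(uncond: List[float], cond: List[float], scale: float) -> List[float]:
    """Classifier-free guidance: uncond + scale * (cond - uncond), element-wise."""
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


def forward_passes(steps: int, scale: float) -> int:
    """Network evaluations per image; the unconditional pass is only needed when scale != 1."""
    passes_per_step = 1 if scale == 1.0 else 2
    return steps * passes_per_step


# Made-up noise predictions for a three-element latent.
uncond = [0.5, -1.0, 2.0]
cond = [1.5, 0.0, 1.0]

# With scale 1 the guided prediction equals the conditional one.
guided = cfg_combine(uncond, cond, scale=1.0)
print(guided == cond)

# 8 steps at scale 1 versus a hypothetical 20-step run with guidance enabled.
print(forward_passes(8, 1.0), forward_passes(20, 4.0))
```

Under these assumptions, few-step sampling without guidance needs a fraction of the network evaluations of a guided multi-step run, which is consistent with the reported sub-second speed.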

Version Comparison: The Unique Positioning of Omni-Base

To clarify what the rename means, we compare the variants in the series. All three share the 6B-parameter, single-stream architecture, but Omni-Base's omni pre-training allows a seamless transition between generation and editing. The community sees this as the essential change from "Base" to "Omni-Base": it improves versatility and lets fine-tuning methods such as LoRA be applied within one unified framework, instead of training generation and editing separately as Qwen-Image does.

| Aspect | Z-Image-Turbo (Distilled) | Z-Image-Omni-Base (Omni Base) | Z-Image-Edit (Editing) |
|---|---|---|---|
| Main Capabilities | Fast generation and multilingual text rendering; sub-second speed. | Unified generation and editing; high diversity and realism; supports omni LoRA. | Precise editing with strong instruction following. |
| Speed & Requirements | Fastest; runs on consumer GPUs (<16GB VRAM). | Slower but highly flexible; requires more than 20 inference steps. | Medium; focused on editing efficiency. |
| Benchmark Performance | Open-source SOTA; leads in Alibaba AI Arena. | Quality superior to Turbo, but no benchmarks released; omni training improves versatility. | Outstanding editing precision; avoids drift. |
| Advantages | Suited to rapid iteration; wide community tool integration. | Seamless task switching through omni pre-training; a unified alternative to Qwen-Image. | Creative repainting that respects constraints. |
| Disadvantages | Editing requires custom workflows; occasional missing detail. | Images may show a generic "AI" look; support for special features such as nudity is uncertain. | Generation is less diverse than Omni-Base. |
| Use Cases | Concept art, news visualization. | Custom development, cross-task fine-tuning. | Image modification, precise adjustments. |

As the table shows, Omni-Base is positioned as the all-purpose model. Community users point out that it should run on hardware such as the RTX 3090, support Q8_0 quantization, and leave room for edge-case features such as nudity generation (Turbo already supports this, while the Omni version reportedly requires a LoRA to unlock it). Compared to larger models such as Qwen-Image (20B), the Z-Image series is more parameter-efficient, while the Decoupled-DMD and DMDR algorithms help keep detail and high-frequency rendering competitive.
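
A quick back-of-the-envelope calculation shows why a 6B-parameter model is plausible on a 24 GB card like the RTX 3090. The sketch below estimates weight storage only. It assumes exactly 6e9 parameters and the ggml Q8_0 block layout (32 int8 weights plus one fp16 scale, i.e. 8.5 bits per weight). The text encoder, VAE, and activations need additional memory that is not counted here, and `weight_gib` is a hypothetical helper.

```python
PARAMS = 6e9  # parameter count stated in the article (assumed exact for this estimate)

BITS_PER_WEIGHT = {
    "fp32": 32.0,
    "bf16": 16.0,
    "q8_0": 8.5,  # ggml Q8_0: 34 bytes per block of 32 weights
}


def weight_gib(params: float, bits_per_weight: float) -> float:
    """Storage needed for the weights alone, in GiB."""
    return params * bits_per_weight / 8 / 2**30


for name, bits in BITS_PER_WEIGHT.items():
    print(f"{name}: {weight_gib(PARAMS, bits):.1f} GiB")
```

Under these assumptions, bf16 weights take roughly 11 GiB and Q8_0 weights roughly 6 GiB, leaving headroom on a 24 GB GPU for the remaining components.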

Development and Future: The Potential of Omni Pre-training

The Z-Image series is developed by Alibaba's Tongyi-MAI team, with a focus on parameter efficiency and distillation techniques. The introduction of omni pre-training marks a shift from task-specific models to a unified framework, and the rename (already popular in the community) points to a broader trend in the open-source ecosystem: fewer variant splits and stronger cross-task compatibility. Turbo is fully available today, while development of Omni-Base and Edit is reportedly complete, with the weight release possibly delayed for optimization. Community contributions are active, including stable-diffusion.cpp integration (supporting 4GB VRAM) and speculation about video extensions, which has not been officially confirmed.